AWS Lambda vs AWS EC2 - Cost Comparison

Let's address the elephant in the room for Serverless.

"Lambda is super expensive at scale, and much more expensive than running EC2 instance(s)."

Yes and no.

The reality is, Lambda is only expensive after a certain requests-per-second threshold (that most apps will never reach).

Another common pitfall is over-provisioning Lambda (configuring more memory than the function needs to complete the operation). Lambda's compute charge is directly proportional to its memory configuration: for the same execution time, a Lambda configured with 10GB of memory costs 10x more than one configured with 1GB. This makes total sense, since the same thing applies if you compare running a smaller EC2 instance vs. a larger one. But because Lambda makes changing this configuration so easy, it's also easy to over-provision by mistake and send Lambda's costs skyrocketing.
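To make the proportionality concrete, here is a minimal sketch of Lambda's compute charge. The per-GB-second rate is an assumption based on AWS's published x86 on-demand price and may differ by region or over time; the function name and the 100 ms duration are hypothetical and only there for illustration.

```python
# Assumed on-demand rate (x86); check the current AWS pricing page.
PRICE_PER_GB_SECOND = 0.0000166667


def lambda_compute_cost(requests: int, duration_ms: float, memory_mb: int) -> float:
    """Compute charge in USD, ignoring the free tier and the per-request fee."""
    gb_seconds = requests * (duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * PRICE_PER_GB_SECOND


# Same traffic and same (hypothetical) 100 ms duration, different memory.
small = lambda_compute_cost(1_000_000, 100, 1024)    # 1GB
large = lambda_compute_cost(1_000_000, 100, 10240)   # 10GB
print(f"1GB: ${small:.2f}, 10GB: ${large:.2f}, ratio: {large / small:.1f}x")
```

The per-request fee does not depend on memory, so the total bill grows by slightly less than 10x.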

Coming back to EC2 costs vs. Lambda costs: EC2 instances are billed at a fixed hourly rate, from the second you launch them. The cost is linear and predictable.

It doesn't matter how many requests you send to that running EC2 instance (even if it's zero): you will always be billed the same at the end of the month, as long as the number of requests stays within the hardware constraints of that EC2 instance.

For example, if every request consumes 256MB of memory, and the EC2 instance has 1GB of available memory, the instance can handle roughly 4 requests in parallel before running out of memory.

Whereas with Lambda, the service will keep spawning runtime environments horizontally (think of them as mini-servers) to serve the new parallel requests, beyond the 4th one (up to account concurrency limits).
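The contrast between the two models can be sketched as follows. The function names are hypothetical, the EC2 figure ignores memory used by the operating system, and the default of 1,000 concurrent executions is an assumption (the actual quota depends on your account and region).

```python
def ec2_parallel_capacity(instance_memory_mb: int, memory_per_request_mb: int) -> int:
    """Requests a single instance can hold in memory at once (OS overhead ignored)."""
    return instance_memory_mb // memory_per_request_mb


def lambda_environments(parallel_requests: int, account_concurrency_limit: int = 1000) -> int:
    """Runtime environments Lambda would run: one per parallel request, capped by the quota."""
    return min(parallel_requests, account_concurrency_limit)


print(ec2_parallel_capacity(1024, 256))  # the 1GB / 256MB example from the text
print(lambda_environments(10))           # Lambda keeps scaling past the 4th request
```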

The benefit is that the Lambda approach ensures no new request ends up rejected (better user experience). The downside is that, past a certain threshold of "requests per second", Lambda's costs will start to exceed the cost of running the EC2 instance(s).

That threshold is called the "break even" point.

Assuming a steady stream of traffic, depending on how many "requests per second" we are talking about, you might be paying more or less by going with Lambda, instead of EC2 instances.

That's why it's important to understand where that "break even" point resides, in a given fictional month that starts on the 1st and ends on the 30th.
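As a rough illustration, the break-even traffic level can be estimated by dividing the instance's monthly cost by Lambda's cost per request. This is a sketch under stated assumptions: the two Lambda rates are AWS's published x86 on-demand prices (they may have changed), the free tier is ignored, and the $50 monthly instance cost and the 200 ms request duration are hypothetical inputs.

```python
# Assumed on-demand rates (x86); check the current AWS pricing page.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # the 30-day fictional month


def lambda_cost_per_request(duration_ms: float, memory_mb: int) -> float:
    """USD per invocation: compute charge plus the per-request fee."""
    compute = (duration_ms / 1000) * (memory_mb / 1024) * PRICE_PER_GB_SECOND
    return compute + PRICE_PER_MILLION_REQUESTS / 1_000_000


def break_even_rps(ec2_monthly_usd: float, duration_ms: float, memory_mb: int) -> float:
    """Steady requests per second at which Lambda's monthly bill equals the EC2 bill."""
    monthly_cost_at_1_rps = lambda_cost_per_request(duration_ms, memory_mb) * SECONDS_PER_MONTH
    return ec2_monthly_usd / monthly_cost_at_1_rps


# Hypothetical inputs: $50/month instance, 200 ms per request.
print(f"128MB: {break_even_rps(50, 200, 128):.1f} req/s")
print(f"256MB: {break_even_rps(50, 200, 256):.1f} req/s")
```

Below the printed rate Lambda is the cheaper option; above it, the instance wins (as long as it can actually handle the load).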

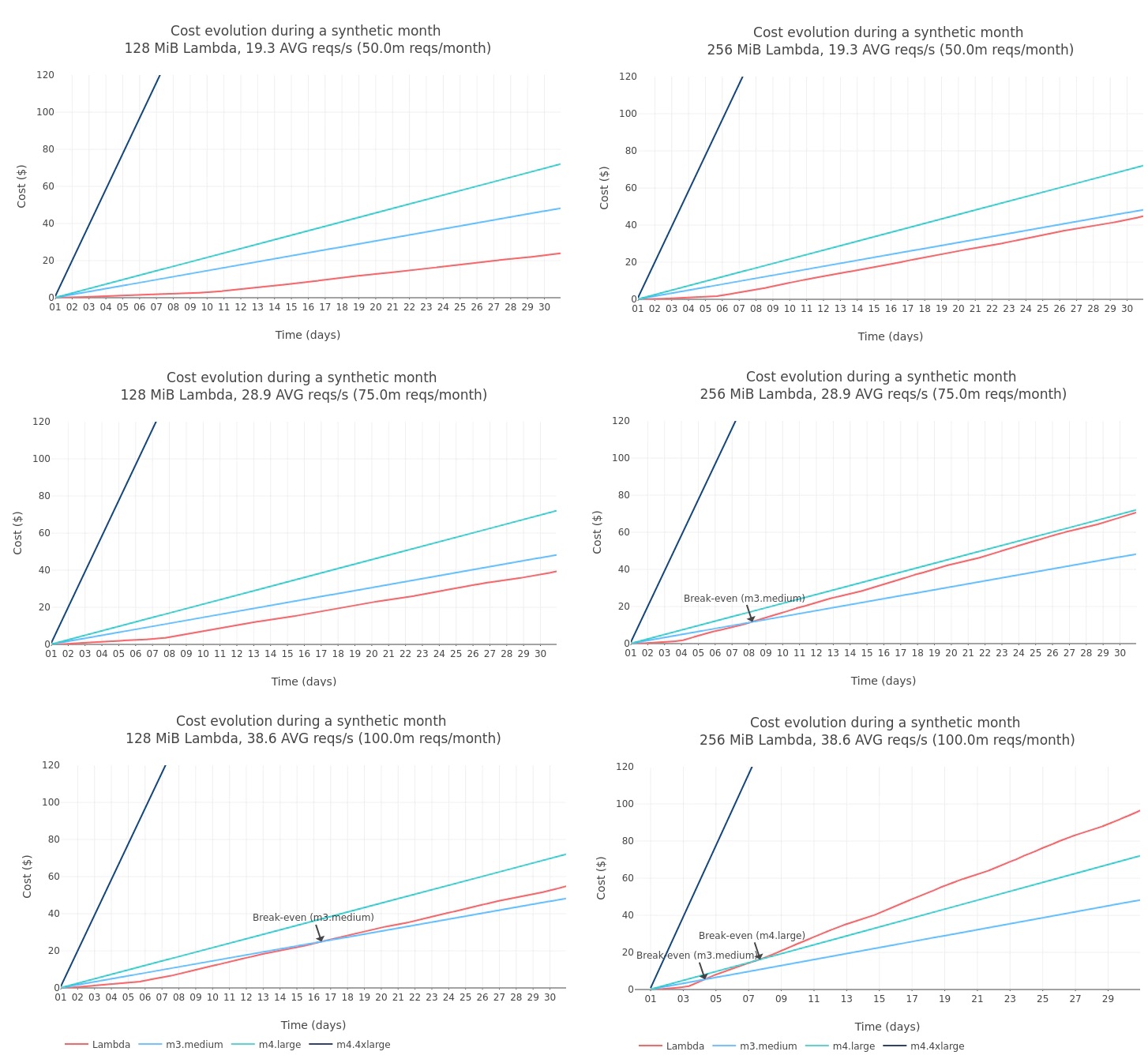

BBVA (the second largest bank in Spain) has conducted a benchmark comparing both AWS services - AWS EC2 and AWS Lambda. The most interesting chart in my opinion is the following:

It compares how Lambda's costs grow over the days of the month (the red line) against the costs of running EC2 instances of various sizes (the other colored lines).

The dark blue line that skyrockets is a big EC2 instance that reaches a cost of $120 as early as the 7th of the fictional month, so it's too expensive to be worth discussing.

The greenish line represents a mid-sized EC2 instance (m3.medium) that costs around $70 at the end of the month.

The light blue line represents the smallest of the three EC2 instances, and its cost is roughly $50 at the end of the month.

You probably noticed that the costs of all three EC2 instances grow linearly, because their hourly price is fixed and not bound to the number of requests.

The red line shows the Lambda costs.

If you look at the first two charts in the left column, you will see that, by the end of the billing month, it's cheaper to use a 128MB Lambda if your app has a steady average stream of 19.3 requests per second (first chart) or 28.9 requests per second (second chart).

In the third chart we see that, as your application traffic grows and you hit an average of 38.6 requests per second, Lambda, being cost-bound to requests, becomes more expensive on a monthly basis than the cheapest of the three EC2 instances. The break-even point falls on the 16th of the billing month.

On the right side, we have 3 charts where everything is the same, except that Lambda's memory configuration was doubled (from 128MB to 256MB). This doesn't affect the EC2 instances, which have a fixed memory configuration for a fixed hourly price.

But Lambda's pricing is hugely influenced by its memory configuration, so it's important to watch out for misconfigurations and over-provisioning, as mentioned at the beginning.

In the first chart of the right column we see that Lambda is still the cheapest option at the 256MB configuration, if your app has a steady stream of 19.3 average requests per second.

However, as you increase the requests per second to 28.9 (and with the doubled Lambda memory configuration), Lambda's costs exceed the cost of the cheapest EC2 instance as early as the 8th of the billing month.

Increasing the requests per second further to 38.6 means Lambda becomes more expensive than the cheapest EC2 instance on the 4th of the billing month. It becomes more expensive than the mid-sized EC2 instance on the 8th of the billing month.
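A crossing day like the ones read off the charts can be approximated by comparing cumulative costs day by day. The sketch below is not the benchmark's method: it assumes AWS's published x86 Lambda rates and the monthly free tier (1M requests and 400,000 GB-seconds), and the 200 ms request duration and $50 monthly instance cost are hypothetical inputs, as is the function name.

```python
from typing import Optional

# Assumed on-demand rates and free tier; check the current AWS pricing page.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20
FREE_GB_SECONDS = 400_000
FREE_REQUESTS = 1_000_000
SECONDS_PER_DAY = 86_400


def first_day_lambda_exceeds_ec2(rps: float, duration_ms: float, memory_mb: int,
                                 ec2_monthly_usd: float,
                                 days_in_month: int = 30) -> Optional[int]:
    """First day on which cumulative Lambda cost exceeds cumulative EC2 cost, else None."""
    ec2_daily = ec2_monthly_usd / days_in_month
    for day in range(1, days_in_month + 1):
        requests = rps * SECONDS_PER_DAY * day
        gb_seconds = requests * (duration_ms / 1000) * (memory_mb / 1024)
        # Only usage beyond the free tier is billed.
        lambda_cost = (
            max(0.0, gb_seconds - FREE_GB_SECONDS) * PRICE_PER_GB_SECOND
            + max(0.0, requests - FREE_REQUESTS) / 1_000_000 * PRICE_PER_MILLION_REQUESTS
        )
        if lambda_cost > ec2_daily * day:
            return day
    return None


# Doubling the memory moves the crossing day earlier in the month.
print(first_day_lambda_exceeds_ec2(38.6, 200, 128, 50))
print(first_day_lambda_exceeds_ec2(38.6, 200, 256, 50))
```

With low enough traffic the function returns `None`, meaning Lambda stays cheaper for the whole month under these assumptions.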

In conclusion, at large scale and with predictable, steady traffic patterns, Lambda is not the right option if we focus purely on the financial perspective.

However, there are many other axes along which we can compare both services, like maintenance costs (how easy it is to apply security updates or patches) or scalability costs (how quick and easy it is to scale up and down during unpredictable traffic spikes), and others. These are all wider topics that deserve their own analysis, in a separate post.

If cost-saving is your only goal though, pay attention to your app's average requests-per-second and average memory configuration, and pick the right tool for the job.

If you have a large number of requests per second and/or need a large amount of memory per request, there's a risk of Lambda being too expensive for you, so lean towards EC2.

If the above two are predictable and under control, stick to Lambda, because it's cheaper to run at smaller scale, which is where most apps fit.